By enshrining interventions that work for privileged people with straightforward illness, standardised care drives inequity.

I’ve always been uncomfortable with the idea that patients are “consumers”.

I understand why the community wanted a term that implied greater agency and power in the therapeutic relationship, although even before Google I knew that my patients with chronic disease often knew a lot more about their own illness than I did.

However, while there are undoubtedly people who are empowered enough to make their own decisions about their health, individually or in partnership with health professionals, some can’t. Like many of my colleagues, I have been sufficiently overwhelmed by illness as a carer or as a patient to turn to my GP and ask “what would you do if you were me?” Not every person is able to make an informed decision when they are sick, overwhelmed and frightened.

I am also humble enough, I hope, to realise I am influenced by marketing. As a “consumer”, I recognise that shiny packets make a difference. When my brain is not switched on, I am likely to pick a product that appeals to me for a whole range of reasons other than rational analysis of the situation, and I am an adult who can read. There are reasons we make such a fuss about food labelling and advertising.

Data and its limits

Which brings me to the concept of “evidence”.

Numbers are seductive. They create the illusion of objectivity, imply precision and simulate truth. However, we know that data can be distorted, research can be biased and even in the best hands, statistics can be misleading. Sometimes unscrupulous operators use shiny numbers to deliberately influence consumer “choice”. The distortion of “evidence” is common in the primary care swamp.

Doctors, of course, should know this. There would be no need for a double-blind trial if data were objective. There would also be no need for conflicts-of-interest statements if any of us were capable of managing our own biases. We also know the difference between a true objective measure, like blood pressure, and a surrogate objective measure, like a pain scale. Yet the need for numbers through “data” is growing.

Marketing tactics in medical research

I am old enough to remember the drug company pens that infested my desk before we all recognised that these innocuous objects influenced our decision making. I also remember the gradual tightening of regulations around advertising alcohol and cigarettes, and why it was necessary. Throughout my career I have managed the fallout when patients have lost their health and their homes paying for expensive unregulated snake oil promising cures with pseudoscientific language they couldn’t understand.

So, I’m getting more and more angry with the marketing of mental health strategies that are tainted by self-interest, profit motives, ambition and commercial benefit. It doesn’t matter whether it is conscious, deliberate or intentional. What matters is the commercial capture of policy.

Drugs and devices are assessed independently by the Therapeutic Goods Administration. There is no equivalent for mental health interventions. There should be. But mental health interventions (apps, protocols, clinics, programs etc.) are funded by governments, recommended by governments and even mandated by governments. The same people design the research, receive the government grants, build the products, evaluate the products and then sit on the committees that mandate their use.

We don’t accept this with drugs and devices. We shouldn’t accept it with mental health.

Patient-centred, or research-centred care?

It is understandable that governments with a public health remit will aim for “the greatest good for the greatest number”. This means supporting interventions that provide the best average outcome for the average patient.

The interventions that provide the “greatest good” are defined by research outcomes. Research is generally done on people who are more white, more male, less complex and more privileged than the real “average” patient. Therefore, “evidence” tends to work best in white, privileged people with straightforward illness. So, weirdly, the more we standardise care, the more we drive inequity. This probably doesn’t matter terribly much in brain tumours. But it is deeply problematic in mental health, where who you are is as important as the illness you “have”.

Evidence-based medicine was never conceptualised as bestowing a universal truth. It was meant to be a tool for the craftsman to use, taking into account their professional assessment and the patient’s preferences. This is a more expensive option than standardised care, but it is much better for the outliers.

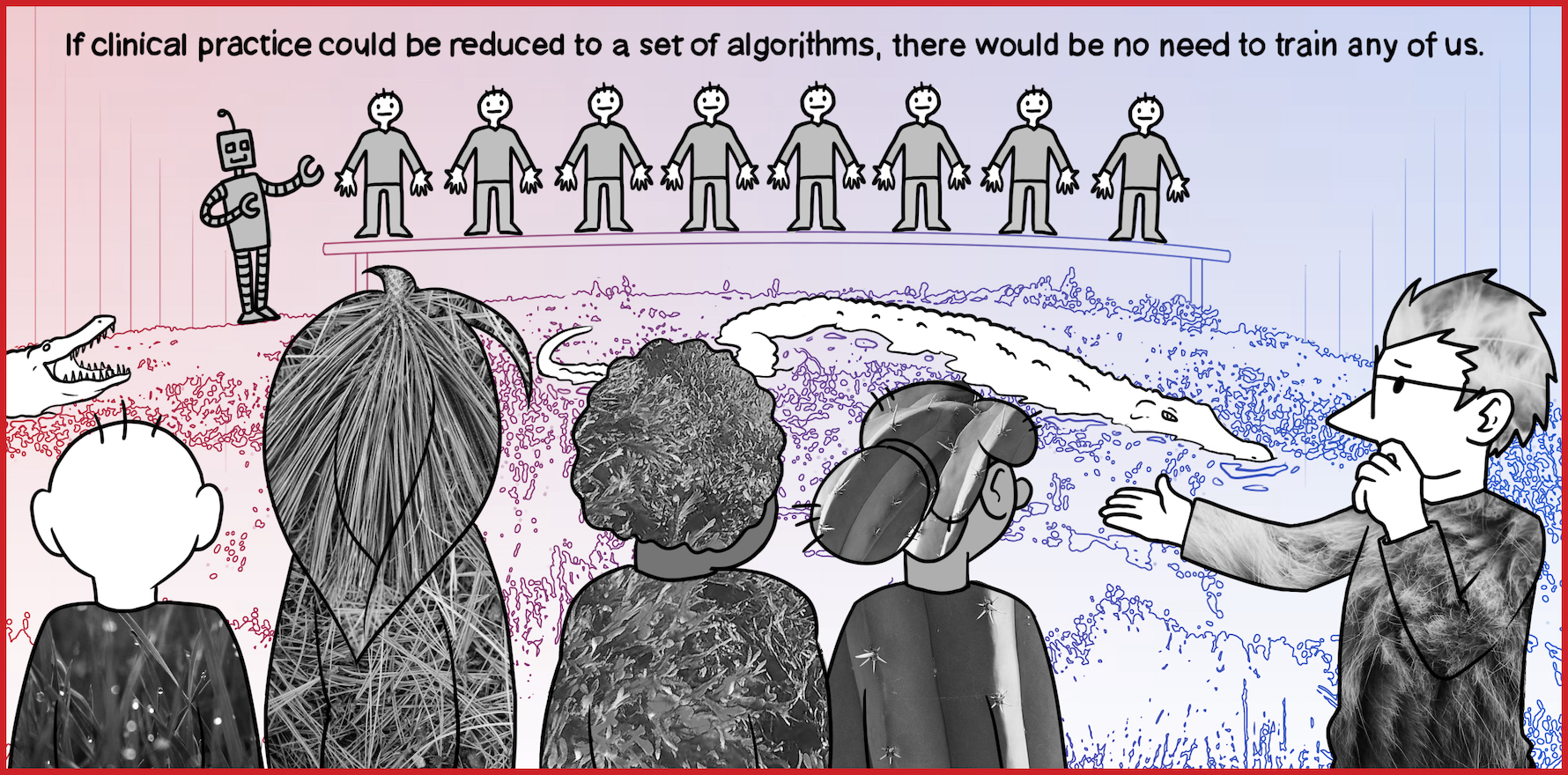

The fundamental difference between standardised and bespoke care is at the heart of much of the conflict between managers or funders and clinicians. Health professionals will always want the best care for the person in front of them. This means individualising care. If clinical practice could be reduced to a series of algorithms, there would be no need to train any of us. We could easily be replaced by a website with an electronic equivalent of a vending machine. The advantage would be a relatively predictable average outcome for the population for a lower and more predictable cost, which would definitely be better for the taxpayer.

The disadvantages fall on the outliers who may have devastating outcomes that are hidden in the data because they are less common.

Our swamp is full of complex patients, and these are the ones who have been ousted from the high ground, because the standardised approaches aren’t working. Nevertheless, there are increasing attempts to standardise swamp-based care using high-ground algorithms, because these algorithms are said to be “evidence-based”. The algorithms are programmed into swamp robots, who drive consumers across preordained paths towards standardised destinations.

Swamp robots

At the moment, technology is seen as an inherent “good” in healthcare. Health professionals who do not use technological solutions are seen as “reluctant” and in need of “education”, rather than as clinicians who are evaluating options with a critical lens. Over time, we are exposed to structural change that drives our use of technology-mediated protocols, and the hardware and software that enable their use.

In other words, robots have infested the swamp.

Swamp robots are created by entrepreneurs who lead empires that benefit from them. The robots are shiny. They use numbers, and technology, and SCIENCE. Their algorithms are based on research from expensive institutions who publish in medical journals. They radiate moral superiority. They are GOOD robots, providing GOOD care. Their productivity can be measured and mapped. Robots are cheap, and tireless and can be counted. Increasingly, they make good, patient-centred, relationship-focused care look a bit, well, shabby.

Sometimes, robots are accompanied by less highly trained human assistants, who can divert them from their preferred paths if necessary, but only slightly. There are ways of removing clinicians from the interaction altogether, providing standardised apps and e-mental health programs that are universally accessible. It is a seductive model and cleans up the messy pathways used by swamp guides. Many of those messy pathways we used to use are now patrolled by the crocodiles, nudging us and our patients towards standardised pathways with robot guidance.

Rich, entitled and educated people can still access patient-centred, rather than “evidence-centred” care, because they can pay for a bespoke service that bypasses the robots. The rest get inappropriate care.

The worst thing about swamp robots is that their algorithms are usually based on research that targets first-episode care alone. Therefore, many people who can only afford government-subsidised mental health robots get the same cognitive behavioural therapy and mindfulness training over and over and over again until they give up. They are usually counted as successful graduates. However, they are simply exhausted by going back and forth over the same 100m stretch of swamp when what they really need is to progress towards recovery on the other side.

Marketing

What really makes me uneasy is the tactics the swamp entrepreneurs use to market their robots. It reminds me a lot of processed foods. There are buzzwords. In food, it’s “natural”, “organic”, or “lite”. In mental health it’s “evidence-based”, “easy access”, “patient-centred” and “co-designed”. There is fine print in journal articles and privacy statements, but like the labelling on food, it is hard to find and hard to read.

In reality, the evidence for robots is contested. It is not “evidence” if it is generated in a population that is fundamentally different to the patient receiving care. It is not “easy access” if you can’t read, have no device or wifi credit, or you are too cognitively slowed by illness to understand written text. It is not “patient-centred” if you have no other affordable option available to you. “Co-design” isn’t helpful if the people on the committee are nothing like you.

Increasingly, the “evidence” is “lite”. There are trials where fewer than 20% of the cohort complete the intervention. Most trials exclude disadvantaged populations. There are trials which base their evidence on a tiny cohort of people who bear little resemblance to the population they claim to represent. For instance, the “trial” (of the InnoWell platform, run by Professor Ian Hickie et al.) where 16 older people without depression completed “at least part” of a survey about a depression app and generally found it unhelpful. The conclusion? “These findings highlight the tremendous opportunity to engage older adults with HITs to support their mental health and well-being, either through direct-to-consumer approaches or as part of standard care.”

When “most depressed individuals would be excluded from a typical clinical trial of depression” there is something wrong with our “evidence”.

Nevertheless, this “evidence” becomes enshrined in policy, perhaps because the funders and the researchers become entangled. Like PwC’s part ownership of a company (InnoWell) that began with a government grant of $33 million, built an app, evaluated the app and then wrote the reports underpinning the National Digital Health Strategy (that suggested more use of apps). The conflicts of interest make my head hurt.

Nowhere do we have “results may vary” warnings. Good, dogged, public mental health care gets plain packaging, while Headspace continues to build shiny, purpose-built institutes. Virtue signalling is rife, with the halo of “value-based healthcare” overshadowing the fact that the GP swamp guides doing the slow, relentless care of patients with unfixable suffering are doing good work that the entrepreneurs and robots will never understand. Sadly, these patients rarely get included in co-design processes, because they are usually too unwell, isolated, overwhelmed or unable to cope with the literacy required to contribute to complex processes.

Interestingly, GP swamp guides are often portrayed as “reluctant” to use the robots. We would not be considered “reluctant” to use a drug or device if we thought either was clinically unhelpful. But with technology-based mental health strategies, the low take-up by patients and clinicians is usually cast as a problem with motivation and capability, rather than a rational clinical decision around efficacy and patient preference.

I sincerely believe it’s time for a star rating. There may be papers in academic journals but this doesn’t always ground the claims that a program is “evidence-based”. Patients, or consumers, deserve better than a veneer of science and veiled conflicts of interest. Robots have their place. They are cheap and cheerful and seem to have some evidence of efficacy for some people.

However, assuming they provide “best practice care” for everyone is at best naïve and at worst deeply harmful for the patients with the most need and the fewest services. Adding layers of governance to drive GP guides to use them is ineffective, inequitable and causes deep moral distress in clinicians who know they are the wrong tool for their patients.

It is time to govern the robots before they completely destroy our clinical ecosystem and allow the most vulnerable patients to drown.

Associate Professor Louise Stone is a working GP, and lectures in the social foundations of medicine in the ANU Medical School. Find her on Twitter @GPswampwarrior.

Dr Erin Walsh is a research fellow at the Population Health Exchange at ANU. Her current focus is the use of visualisation as a tool for communicating population health information, with ongoing interests in cross-disciplinary methodological synthesis.